Shadow AI: The New Unseen Risk Every Company Must Manage

Best Practices

Dec 23, 2025

What is Shadow AI?

You already know shadow IT: tools that teams adopt without approval from IT or procurement.

Shadow AI is that problem, upgraded with more risk and less visibility.

Shadow AI is the use of AI tools and AI features without formal approval, accountability, or oversight from your company.

That includes:

Standalone tools like ChatGPT, Claude, Copilot, Gemini

AI features buried inside existing software

Browser extensions that read everything on the page

Personal or “free” AI accounts used for company work

Employees reach for these tools because they want leverage. They paste contracts into chatbots to summarize them, upload CSVs to write board decks, and connect inboxes to AI assistants that promise “inbox zero.” None of those tools went through vendor review. Legal has not approved their data handling. Security does not know they exist.

The result is simple: your most sensitive information is flowing into a set of systems that you do not track, do not control, and often cannot audit.

That is shadow AI.

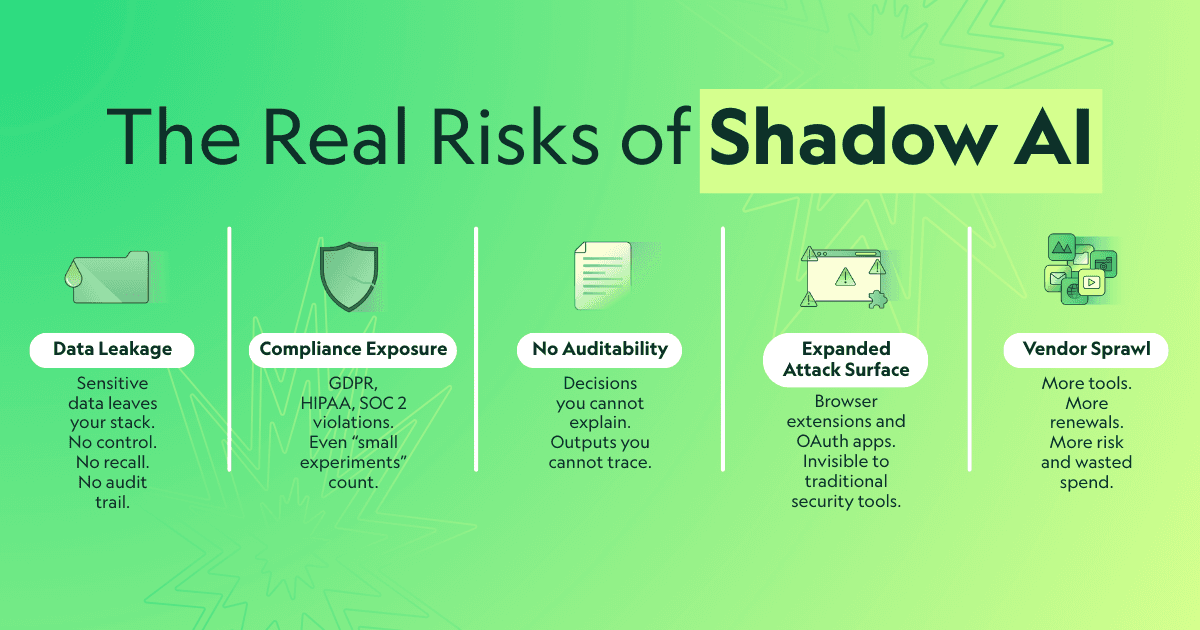

The risks that come with shadow AI

Shadow AI is not a vague “future concern.” It creates very specific, very current risks for finance, IT, security, legal, and compliance.

1. Data leakage and IP loss

When someone pastes a customer list, source code, pricing strategy, or MSA into a random AI tool, that information leaves your stack. In many cases:

You do not control how long it is stored

You do not have clarity on where it is stored

You cannot confidently delete it

You do not have a clean record of what was shared

That is permanent, untracked data leakage. Sometimes it is company confidential information. Sometimes it is regulated data. Sometimes it is the secret sauce that actually differentiates your business.

2. Compliance and regulatory exposure

Shadow AI lives in the blind spots of every framework your company claims to follow:

GDPR and global privacy regulations

SOC 2, ISO 27001, PCI, HIPAA

You can have a polished security questionnaire response and still have employees piping personal data into AI tools that never passed a single security review. Regulators and auditors will not care that the usage was “just an experiment.” Once data leaves your environment in a way you cannot trace, your risk posture changes.

3. No audit trail and opaque decision-making

AI is already influencing real decisions: vendor selection, pricing, forecasts, customer communication, and even policy language.

When those workflows involve unapproved AI tools, basic questions become hard to answer:

Who used which system to generate this content

Which model processed which data set

What was the prompt, what was the context, and is there a record

If your board, auditors, or regulators ask you to reconstruct how a particular decision was made and the answer is “someone typed something in a chatbot somewhere,” that is a problem.

4. A much larger attack surface

Shadow AI often arrives as:

Browser extensions with broad permissions

OAuth apps connected to Gmail, Slack, Drive, and other core systems

“Free forever” SaaS with minimal security posture

These tools extend your attack surface in quiet, creative ways. They can read cookies, scrape screens, save documents, and transmit content to third parties without any central logging. Security teams that only look at the big, well-known AI vendors are missing a huge portion of the real risk.
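To make "broad permissions" concrete, here is a minimal sketch that flags a browser extension manifest asking for access to every site or to cookies. The `permissions` and `host_permissions` keys and the `<all_urls>` pattern come from the Chrome extension manifest format. The set of permissions treated as "broad," the function name, and the example extension are assumptions for illustration, not a vetted security baseline.

```python
import json

# Assumption for this sketch: which permissions count as "broad".
BROAD_PERMISSIONS = {"cookies", "tabs", "history", "webRequest", "clipboardRead"}
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def broad_permissions(manifest_json: str) -> list[str]:
    """Return the sorted broad permissions and host patterns a manifest requests."""
    manifest = json.loads(manifest_json)
    requested = set()
    for key in ("permissions", "host_permissions"):
        # Keep only string entries; ignore anything else in the array.
        requested |= {p for p in manifest.get(key, []) if isinstance(p, str)}
    return sorted(requested & (BROAD_PERMISSIONS | BROAD_HOST_PATTERNS))


# Hypothetical manifest for an AI "page summary" extension.
example = json.dumps({
    "name": "Hypothetical Page Summarizer",
    "manifest_version": 3,
    "permissions": ["storage", "cookies", "activeTab"],
    "host_permissions": ["<all_urls>"],
})
print(broad_permissions(example))
```

A check like this only tells you what an extension is allowed to do, not what it actually sends to third parties, so treat it as a triage signal rather than a verdict.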

5. Vendor sprawl and wasted spend

Every AI helper, plugin, copilot, and “smart” feature represents:

A vendor relationship

A contract or clickwrap agreement

A data processing and compliance profile

A potential renewal commitment

Most companies already struggle to track normal SaaS renewals. Now add a fast-growing layer of AI tools that show up on corporate cards, get adopted by one team, and never see a formal review.

Shadow AI is vendor sprawl with higher stakes and an unknown price tag.

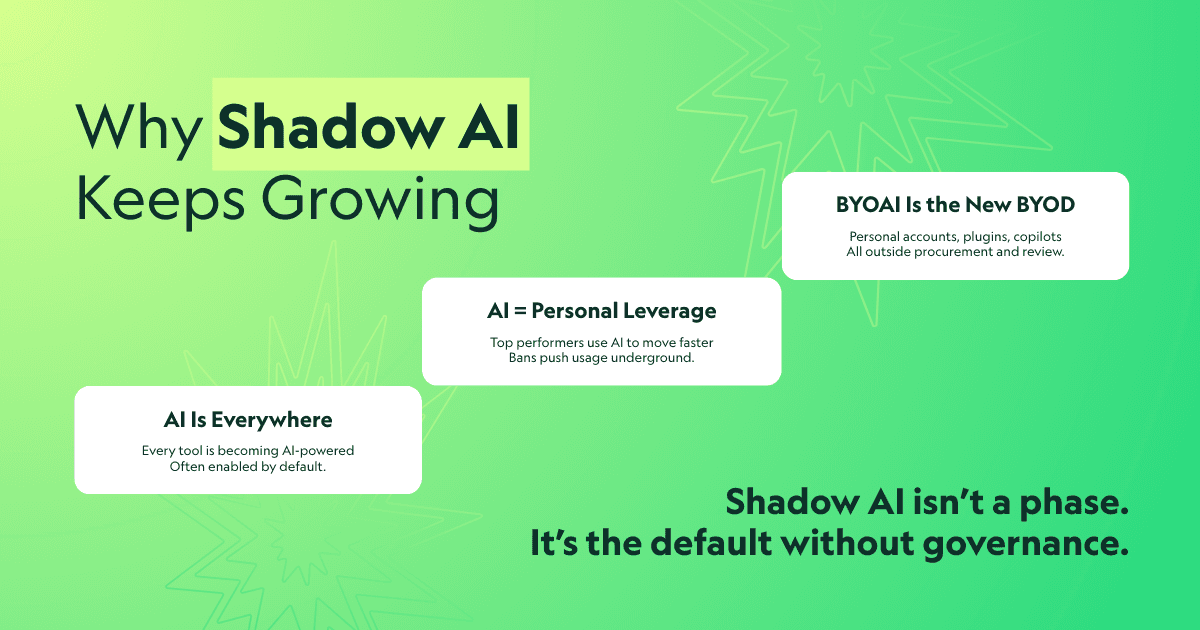

Why shadow AI is only going to grow

You cannot blocklist your way out of this trend. The incentives go the other direction.

A few forces are driving this:

1. AI is getting embedded into everything

Even if you never sign a contract with a standalone AI vendor, your stack is incorporating AI anyway. Productivity suites, CRMs, HR tools, project management platforms, design tools, browsers, and developer tools all ship AI copilots and “assist” features. Many are enabled by default or pushed by sales.

That means your existing vendors are quietly becoming AI vendors.

2. AI equals personal leverage

The employees you want to keep are the ones looking for leverage. They use AI to:

Write faster

Analyze data more quickly

Draft contracts and policies

Prepare leadership updates and investor memos

If your only stance is “do not use these tools,” they will either ignore the policy or go somewhere that respects their desire for leverage. Shadow AI thrives in organizations that say “no” without providing a better “yes.”

3. BYOAI is the new BYOD

People already bring their own devices, cloud accounts, note-taking apps, and side tools. Now they bring their own AI stack too:

Personal AI accounts used for work

AI email and calendar assistants connected to company inboxes

Screenshot and transcription tools that upload internal meetings to external services

Every one of those tools operates outside your procurement and compliance processes unless you intentionally pull them into the light.

Shadow AI is not a passing phase. It is the default state when you do not have intentional governance and vendor visibility.

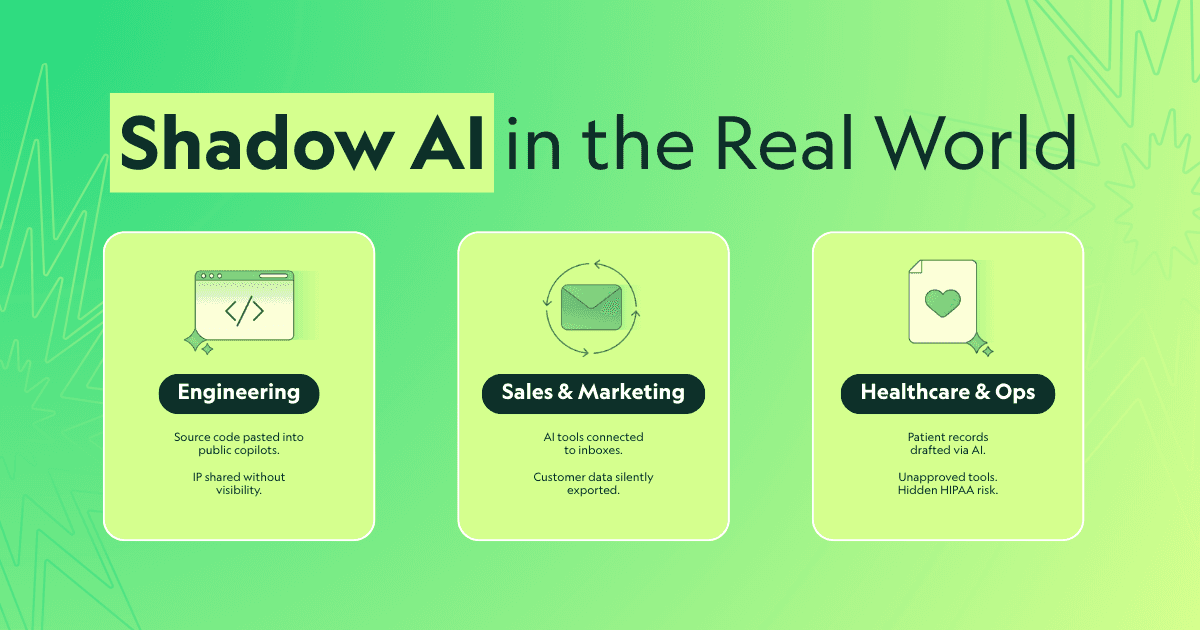

Real-world shadow AI examples

If this still feels abstract, it helps to look at real patterns that keep showing up across industries.

Engineers and public copilots

An engineering team starts leaning on a popular coding assistant. No one runs a vendor review, because it is “just a plugin in the IDE.” Over time, engineers paste entire core services into the assistant for debugging and ask it to refactor key algorithms. The model provider now has access to critical IP, and the company has no reliable record of what was shared. (IBM)

Sales teams and AI email writers

A sales rep signs up for an AI email writing tool with a company card. The tool asks to connect to the rep’s inbox to “personalize outreach.” It slurps in entire email histories, attachments, and customer-level conversations. No one in finance, security, or legal has evaluated the vendor. Yet the tool now has a complete view of customer communication and sensitive commercial information. (The Hacker News)

Healthcare and documentation assistance

Clinical staff or administrators use general-purpose AI tools to draft visit summaries or letters to insurers. They paste in full patient records or screenshots from the EMR. Even if names are removed, the combination of fields can still be considered personal or regulated health information. The AI vendor has not signed any healthcare-compliant agreement. The organization now has invisible HIPAA exposure. (HIPAA Journal / Medical Economics)

Browser extensions and quiet data collection

A designer, marketer, or analyst installs an AI browser extension that offers “instant insights” or “page summaries.” The extension reads the DOM of every page, including internal dashboards, deal rooms, internal admin panels, and product backends. That content is sent to a third party. IT may never know, because no contract exists and nothing goes through procurement. (The Hacker News)

These stories are not rare. They are day-to-day behaviors in most companies that have not yet put real AI governance and vendor intelligence in place. (IBM)

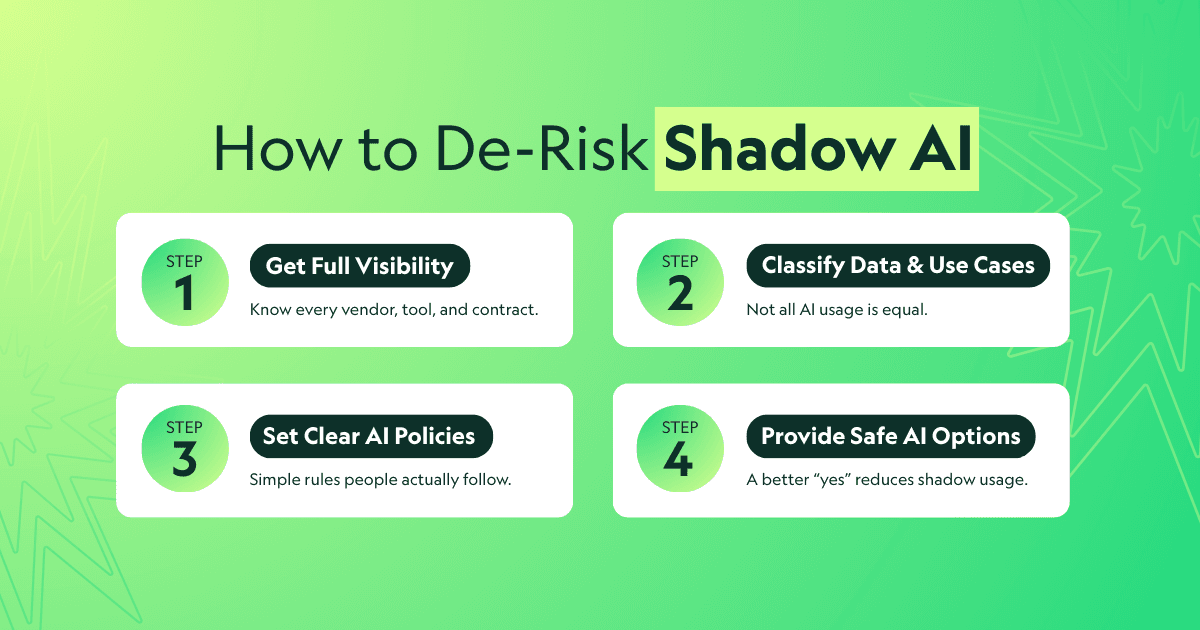

How to de-risk your company around shadow AI

You do not need to outlaw AI, and you could not even if you wanted to. What you do need is a plan that gives you visibility and control.

Here is the shape of that plan.

1. Build a real inventory of vendors and tools

You cannot govern what you cannot see. Start with an honest inventory that answers:

Which vendors do we pay

Which contracts reference AI, machine learning, or data processing in a meaningful way

Which tools include AI features that touch sensitive data

Which tools are clearly AI products that entered as “small experiments”

If your only inventory is a spreadsheet someone updates “when they remember,” you already know it is incomplete.

Assembling a complete, ever-evolving list of vendors and tools has traditionally been a herculean task, but this is an instance where AI-native tools, like BRM, can do the work for you: AI to combat AI sprawl.

2. Classify your data and AI use cases, and add that classification to your onboarding compliance steps

Not all AI usage is equal. Drafting generic content is low risk. Feeding a raw customer ledger to an unvetted chatbot is high risk. You need:

Clear data categories, including what is regulated, what is confidential, and what can be treated as low sensitivity

A simple mapping of AI use cases to allowed, restricted, or prohibited zones

Alignment across finance, IT, security, legal, and compliance on this framework

When that framework exists, real decisions become easier. A tool is not simply “good” or “bad.” It is appropriate or inappropriate for specific types of data.
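One way to picture that framework is as a small lookup table. The sketch below maps a data category and a tool's review status to an allowed, restricted, or prohibited zone. The category names, status labels, and the specific assignments are hypothetical; your own table should come out of the cross-functional alignment described above.

```python
from enum import Enum


class Zone(Enum):
    ALLOWED = "allowed"
    RESTRICTED = "restricted"   # e.g. allowed only with extra review
    PROHIBITED = "prohibited"


# Hypothetical policy table: (data category, tool status) -> zone.
POLICY = {
    ("low_sensitivity", "approved"): Zone.ALLOWED,
    ("low_sensitivity", "unvetted"): Zone.ALLOWED,
    ("confidential", "approved"): Zone.ALLOWED,
    ("confidential", "unvetted"): Zone.PROHIBITED,
    ("regulated", "approved"): Zone.RESTRICTED,
    ("regulated", "unvetted"): Zone.PROHIBITED,
}


def zone_for(data_category: str, tool_status: str) -> Zone:
    """Look up the zone; anything not explicitly listed defaults to PROHIBITED."""
    return POLICY.get((data_category, tool_status), Zone.PROHIBITED)


# Drafting generic content in an unvetted tool vs. a customer ledger in one.
print(zone_for("low_sensitivity", "unvetted").value)
print(zone_for("confidential", "unvetted").value)
```

Defaulting unknown combinations to prohibited is a deliberate choice in this sketch: a new data category or tool status has to be classified before anyone can use it.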

Many BRM customers have found that the best way to incorporate this into their vendor process is through AI compliance requirements. By using BRM, they can leverage BRM’s trained SuperAgents to find each vendor’s AI compliance information and track any changes that may happen.

3. Write AI usage policies that humans can follow

A 12-page policy in legalese will not solve shadow AI. Employees need plain language answers:

What can I put in a public AI tool?

What must stay inside approved systems?

Which AI tools are approved for my team?

How do I request a new one?

If the policy cannot be summarized in one page for non-lawyers, you do not have a policy that will change behavior.

4. Give people safe, sanctioned AI options

If there is no safe “yes,” people will create their own. The fastest way to reduce shadow AI is to:

Provide approved AI tools with clear boundaries

Integrate AI directly into systems where people already work, under your governance

Explain why these options are safer, and what they allow

You will not eliminate experimentation, but you will channel most of the energy into systems that your company actually understands and can review.

How to stay ahead of shadow AI instead of chasing it

Shadow AI is not a one-and-done project. New tools appear every month, existing vendors ship new AI features, and teams continue to look for shortcuts.

In practice, staying ahead requires three capabilities that run continuously:

A live map of vendors and tools

Continuous monitoring of contracts, policies, and compliance data

Intake and renewal workflows that enforce your rules without constant manual policing

Here is how those look in a mature environment.

1. Continuous vendor discovery

A static spreadsheet or a one-time “vendor audit” cannot keep up with AI-driven change. You need an always-on approach that:

Connects to ERP, card providers, AP, HRIS, IdP, and email

Automatically detects new vendors, tools, and AI services

Links each vendor to the contracts, invoices, users, and business units behind it

This is the base layer. Without it, every other AI governance initiative sits on guesswork.
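At its simplest, the detection step is a comparison between what you are paying for and what you have on record. The sketch below groups card transactions whose merchant does not appear in a known vendor list. The `Transaction` fields, the name normalization, and the sample data are assumptions for illustration; real card and ERP feeds have messier merchant names and need fuzzier matching than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    merchant: str
    amount: float
    cardholder: str


def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so 'Acme  AI' matches 'acme ai'."""
    return " ".join(name.lower().split())


def unknown_vendors(transactions, known_vendors):
    """Group transactions by merchant for merchants missing from the inventory."""
    known = {normalize(v) for v in known_vendors}
    found: dict[str, list[Transaction]] = {}
    for tx in transactions:
        key = normalize(tx.merchant)
        if key not in known:
            found.setdefault(key, []).append(tx)
    return found


# Hypothetical sample data.
inventory = ["Acme CRM", "Example Cloud"]
card_feed = [
    Transaction("ACME  CRM", 500.0, "dana"),
    Transaction("Hypothetical AI Writer", 29.0, "sam"),
    Transaction("hypothetical ai writer", 29.0, "lee"),
]
for merchant, txs in unknown_vendors(card_feed, inventory).items():
    print(merchant, len(txs), sorted(tx.cardholder for tx in txs))
```

Grouping by merchant rather than listing raw transactions also shows who is using the tool, which is the link to users and business units that the list above calls for.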

2. Contracts that are actually readable and actionable

Knowing that you pay “Vendor X” is not enough. You need intelligence about:

Which products and AI modules you have actually purchased

How each vendor can use your data

What they can do with training and retention

Where data is stored and who their subprocessors are

That information usually lives in buried PDFs. Extracting it manually is slow and painful. If you want real control over shadow AI, you need contract intelligence that turns fine print into searchable, structured data.

3. Intake workflows that bake AI governance into compliance

Most shadow AI starts as “just try this tool for a month.” Intake is where you either catch that or miss it.

Intake that helps you win:

Lives where people already are, not in a forgotten portal

Routes requests through finance, legal, IT, and compliance according to your rules

Captures why the tool is needed and what data it will touch

Checks for overlapping tools and existing capabilities

Integrates AI policy checks automatically, including questions about AI features and data usage

When that structure exists, the default behavior is safe, even for busy teams that do not think of themselves as part of procurement.
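Rule-based routing of this kind can be sketched in a few lines. The example below decides which teams must approve a request based on its cost, the data it will touch, and whether it has AI features. The `IntakeRequest` fields and the routing rules themselves are hypothetical; they stand in for whatever rules your own finance, legal, IT, and compliance teams agree on.

```python
from dataclasses import dataclass, field


@dataclass
class IntakeRequest:
    tool_name: str
    justification: str                      # why the tool is needed
    data_categories: set[str] = field(default_factory=set)
    has_ai_features: bool = False
    annual_cost: float = 0.0


def required_approvers(req: IntakeRequest) -> list[str]:
    """Return the teams that must sign off, under this sketch's assumed rules."""
    approvers = {"it"}                      # IT reviews every request
    if req.annual_cost > 0:
        approvers.add("finance")            # paid tools go to finance
    if req.has_ai_features or "confidential" in req.data_categories:
        approvers.add("legal")              # AI features or confidential data
    if "regulated" in req.data_categories:
        approvers.add("compliance")         # regulated data
    return sorted(approvers)


# Hypothetical "just try this tool for a month" request.
request = IntakeRequest(
    tool_name="Hypothetical AI Notetaker",
    justification="Summarize customer calls",
    data_categories={"confidential"},
    has_ai_features=True,
    annual_cost=1200.0,
)
print(required_approvers(request))
```

Because the rules live in one function, a free trial with AI features gets the same legal review as a paid contract, which is the point of catching tools at intake.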

4. Renewal management that treats AI risk as a first-class signal

Renewals are where risk and waste both compound. A contract that was low risk two years ago might now be high risk because the vendor introduced AI features or changed data usage terms.

Renewal management that keeps you in control:

Tracks every renewal date in one view

Surfaces spend, usage, and contract details for each vendor

Flags AI-related risks and changes in terms

Highlights consolidation opportunities and duplicate tools

That is how you stop paying for AI-powered vendors that quietly work against your risk posture.
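As a rough sketch of treating AI risk as a first-class signal, the example below finds renewals whose notice deadline is coming up and sorts the ones with changed AI terms to the top. The `Renewal` fields, the 30-day lead time, and the sample vendors are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Renewal:
    vendor: str
    renewal_date: date
    notice_days: int                 # notice period required before renewal
    ai_terms_changed: bool = False   # vendor added AI features or changed data terms


def needs_attention(renewals, today, lead_days=30):
    """Return (renewal, notice_deadline) pairs due within lead_days, AI-flagged first.

    Deadlines that have already passed are not returned by this sketch.
    """
    window_end = today + timedelta(days=lead_days)
    due = []
    for r in renewals:
        deadline = r.renewal_date - timedelta(days=r.notice_days)
        if today <= deadline <= window_end:
            due.append((r, deadline))
    # False sorts before True, so AI-flagged renewals come first, then by deadline.
    due.sort(key=lambda pair: (not pair[0].ai_terms_changed, pair[1]))
    return due


# Hypothetical sample data.
renewals = [
    Renewal("Vendor A", date(2026, 3, 15), notice_days=60),
    Renewal("Vendor B", date(2026, 2, 20), notice_days=30, ai_terms_changed=True),
    Renewal("Vendor C", date(2026, 12, 1), notice_days=30),
]
for renewal, deadline in needs_attention(renewals, today=date(2026, 1, 1)):
    print(renewal.vendor, deadline.isoformat(), renewal.ai_terms_changed)
```

The key detail is that the clock runs to the notice deadline, not the renewal date: a contract renewing in March with a 60-day notice period needs a decision in January.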

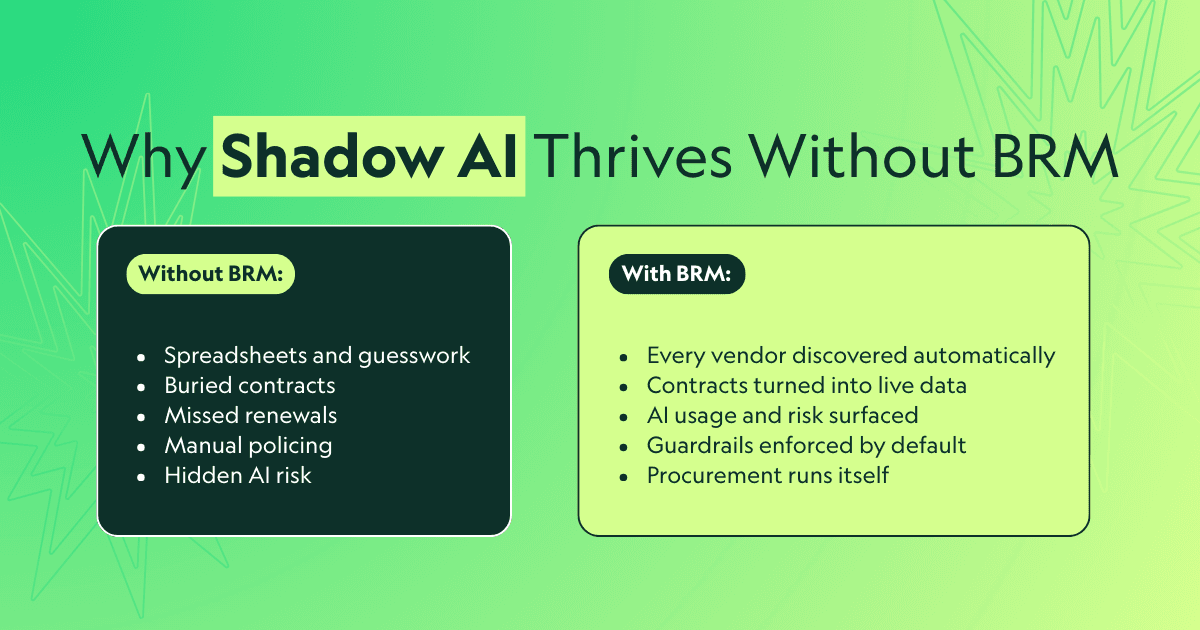

How BRM helps you control shadow AI without scaling headcount

This is exactly the world BRM is built for.

BRM is not another CLM, spend tracker, or approval form. It is your AI-powered procurement and vendor management team that finds every vendor, reads every contract, and manages every request, so you get clarity and control without turning your job into spreadsheet gymnastics.

Here is how BRM lines up against shadow AI.

1. Total vendor clarity, including the tools no one remembered to log

From the moment you connect BRM, AI SuperAgents scan your environment and uncover:

Vendors from ERP, AP, and card data

Contracts from inboxes and drives

Tools inferred from usage and spend

The result is one clean vendor record for each relationship. You see true cost, ownership, utilization, and key terms in one place. You can also identify vendors and tools that involve AI usage, instead of guessing.

2. Contract intelligence that highlights AI risk automatically

BRM turns buried PDFs into structured, live data. It extracts:

Renewal dates and notice periods

Pricing and commercial terms

Product and module breakdowns

Security, privacy, and data usage language

That is exactly where AI shows up in your risk profile. With BRM, legal, finance, and IT do not have to dig. They can simply ask BRM’s AI assistant questions about data rights, training clauses, or processing regions and get precise answers based on your actual contracts.

3. Intake that enforces guardrails without slowing the business

BRM gives you intake that takes care of itself.

Requests are captured in one place

Approvals are automatically routed to finance, legal, IT, and compliance based on your rules

BRM agents gather vendor security documents and compliance data

AI-related questions and policy checks are built in

You are not chasing Slack threads and email chains. You are not playing traffic cop. The process runs in the background while you focus on bigger strategic decisions.

4. Renewal guardrails for AI and non-AI vendors

BRM keeps renewals from turning into “surprise charges” or silent extensions of risky AI relationships.

Live renewal calendar across all vendors

Automatic notifications before notice periods

Context on usage, overlapping tools, and contract history

A clear view of which vendors pose AI or compliance risk

Customers have already seen dramatic savings by catching renewals and consolidating tools. Add AI risk into that picture, and the value is even larger: fewer zombie contracts, fewer unvetted AI tools, and better negotiation leverage.

5. An AI assistant that keeps AI questions inside your own walls

With BRM, every screen comes with an AI assistant trained on your vendor data, your contracts, and your policies. Your team can ask:

Which vendors have the right to train on our data

Which contracts reference generative AI or machine learning

Which tools touch PII or PHI

Which AI tools will renew in the next quarter

They get immediate, accurate answers without pasting sensitive documents into external chatbots. That is how you give employees the power of AI, while keeping governance and visibility where they belong.

Conclusion

Shadow AI is not going away. You can either let it grow quietly in the corners of your stack, or you can own it.

Owning it means:

Knowing every vendor, contract, and tool you pay for

Understanding where AI lives in those relationships

Baking compliance and AI policies into every request and renewal

Giving your team AI leverage in ways that respect your risk posture

BRM exists to make that possible for lean finance and ops teams that do not want to hire an army of procurement and compliance staff.

Vendors already have playbooks. It is time buyers had one too.
